I’m not sure if this will be the last AP post I make, but it sure seems that way, unless people (including those of us who are members of the, you know, membership organization called College Board) put enough pressure on it to continue providing data in the detailed format it always has.

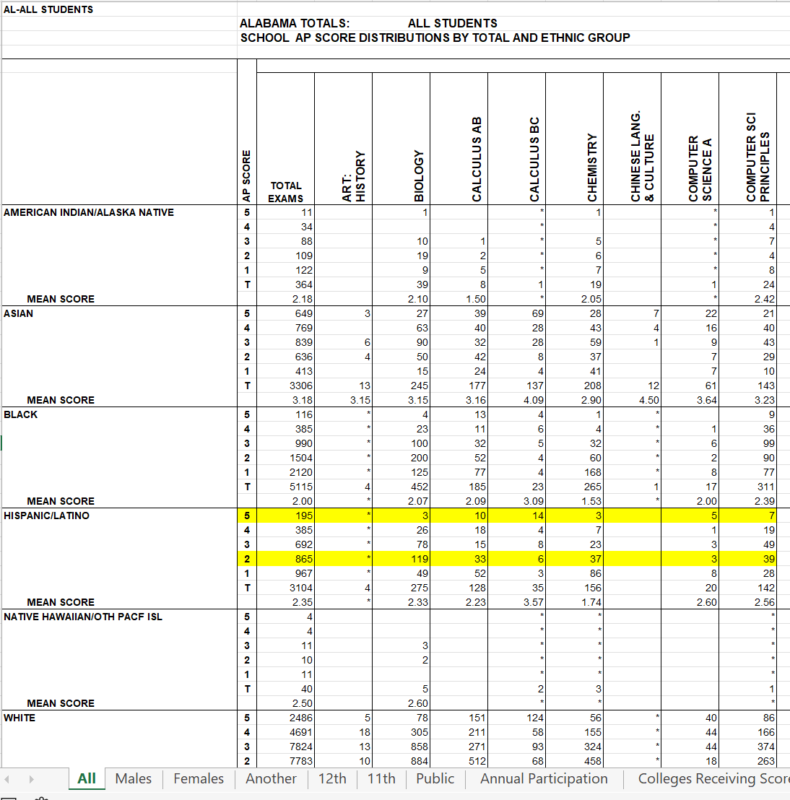

In case you don't know, College Board used to put very granular data on its website, for anyone to download and examine. You could look at data by state, by student ethnicity, by specific exam, and by AP score. For instance, this was a sample from one of the years showing the state of Alabama. You can see the data, and at the bottom, see other breakouts: Public schools, male/female, 11th/12th graders, etc. It was a gold mine of data, if you wanted to spend the time looking and digging and calculating. I've only scratched the surface in the three or four posts I've done on this blog, partly because the data are in spreadsheet format, and it takes a lot of cleanup to get it into useable shape for Tableau.

So anyway, I had taken a look at AP scores in 2019 and 2020 to see how they changed during COVID. It looked to me that scores had risen, which was probably not a problem in itself, for a lot of reasons:

- We were dealing with COVID, and there was major disruption everywhere, including schools. And of course, things that happen in a student's home affect school, too.

- The exams were converted to 45-minute, take-at-home formats. College Board assured us that they could predict a student's actual score with fewer and shorter questions, but I don't recall being shown that data. You just have to believe.

- The populations of students who took the test probably changed, too. Students with lower performance may have dropped out. Students from lower-income families might not have had access to computers at the precise time they needed to test. It was suggested that if bandwidth at home was a problem, students could just take their exams in the parking lot of a McDonald's using the free wifi. There were some difficulties with the technology, too, as students who completed the exam couldn't upload their results.

- The grading process may have been compromised in unintentional ways: Teachers may have been confused about the new format. And, during a time when we could all use a little grace and understanding and compassion, those things might have entered into the grading calculations, too. No one could fault people for giving kids the benefit of the doubt.

And no one could have blamed the College Board for the effects of the pandemic. Except in one area.

When College Board announced the new 45-minute exams, they said the colleges would accept them just like they accepted the results from previous years. Problem is, they didn't exactly ask the colleges, so once they announced it, it was unlikely that any college was going to unilaterally be the bad guy. A stroke of strategic (if evil) genius, if you will.

College Board, of course, claims it did ask colleges, but it seems like the only ones it really asked were its trustees, who are, of course, trustees because they align philosophically with College Board. And the trustees helped out by making a video telling students that it was their responsibility to take the exams. Sweet.

Because the alternative would have been to cancel the tests. And College Board likes its revenue, so that wasn't going to be an option.

But once the worst of COVID was over, I wanted to see if the 2021 scores had returned to some semblance of normal. I think all colleges have a right to this information, given that we grant academic credit--the coin of the realm in higher education--trusting that the exams measure and assess what they say they do. Without warning, College Board pulled the data off its website, and did not publish the granular data for inspection for 2021.

I complained about it on Twitter. Not only do colleges have a right to this, so do students, teachers, and parents, even if most will never look at it. And College Board is touting AP as a good substitute in a test-optional college admissions world as the SAT has taken a life-threatening blow, so more transparency is critical, I think. I re-tweeted it later. The whole time I was reminded of the frequent tweets of Akil Bello, pointing out David Coleman's claim of "transparency" in all they do.

One day this article by Jeremy Bauer-Wolf appeared, and it got a little attention (not enough to change minds at College Board, but that's another issue in and of itself). And I thought that was that. It wasn't.

Some right-leaning and even alt-right sites picked it up, and made it seem like the race issue was the big one. It wasn't, and in fact, I've visualized the data from prior years showing differences by ethnicity. It pissed me off, but I suppose that's where we are in America these days. So on the visualization below, you won't see any comparisons of scores by race.

The interesting thing is that if College Board had published the data, and I had visualized it, maybe 2,500 people would have seen it. As it is, I'm sure tens, if not hundreds, of thousands of people have had the opportunity to think about this: about College Board's responsibility to members (colleges and high schools) and to students, not to mention society writ large.

So, after all that, the data, below, in six views, using the tabs across the top. The first view, Changes 2018--2020, shows changes in volume by state from 2018 to 2019. If you hover over a state bar, you'll see a pop-up of the changes in that state by score distribution: The 5s increased by 2%, or the 3s were down 8%, for instance. Again, causality is a dangerous thing. Don't rush to judgements.

The second view, Scores and Household Income, is for the racists. Nothing is labeled, so you can't tell which point shows which, but you should see the relationship between income and performance on exams. Chalk it up to race if you want, but it's not quite that simple. Income and ethnicity are tied together in America, and if you don't believe me, argue with the data. The filter will take you from national to state data if you wish. Actually, I encourage you to do so.

The third view, Income and Scores by State, shows the same thing, broken out in more detail, for the skeptics. Again, no labels. Just look at the patterns.

The fourth view, Mean Scores by State, shows mean scores for 2018, 2019, and 2020 by state. You can filter to specific tests using the control at the top.

The fifth view, Changes in Mean Scores, shows the difference between means in 2018 and 2020. Use the controls to change the state or ethnicity if you'd like.

And finally, Participation by Ethnicity shows three years of exam volume by student race on the bars, and year-over-year percentage changes on the lines.
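If you want to try the same kind of arithmetic on your own spreadsheet, here is a minimal Python sketch of the two calculations behind the last few views: a mean score computed from counts of exams at each score, and a year-over-year percentage change. The counts, the `counts_by_year` layout, and the helper names `mean_score` and `pct_change` are all made up for illustration; they are not College Board figures or the actual calculations behind the visualization.

```python
# Hypothetical counts of exams at each AP score (1 through 5).
# These numbers are invented for illustration; they are NOT College Board data.
counts_by_year = {
    2019: {1: 200, 2: 300, 3: 500, 4: 300, 5: 200},
    2020: {1: 150, 2: 250, 3: 500, 4: 350, 5: 250},
}


def mean_score(counts):
    """Weighted mean score from a {score: number_of_exams} mapping."""
    total_exams = sum(counts.values())
    return sum(score * n for score, n in counts.items()) / total_exams


def pct_change(old, new):
    """Percentage change from old to new (positive means an increase)."""
    return (new - old) / old * 100


# Mean score per year, and the change in the mean between the two years.
means = {year: mean_score(c) for year, c in counts_by_year.items()}
mean_diff = means[2020] - means[2019]

# Year-over-year percentage change in the number of 5s.
fives_change = pct_change(counts_by_year[2019][5], counts_by_year[2020][5])

print(f"Mean 2019: {means[2019]:.2f}, mean 2020: {means[2020]:.2f}")
print(f"Change in mean: {mean_diff:+.2f}")
print(f"Change in 5s: {fives_change:+.1f}%")
```

A shift in the mean like this says nothing by itself about why it happened; a change in who took the exam would move the number just as easily as a change in grading.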

Source: Higher Ed Data Stories

What is the convention?

The convention came into force in 2018. In simple terms, state parties to the convention have to make arrangements to assess foreign qualifications from other party states (Article III of the convention).

Qualifications will be recognized “unless a substantial difference can be shown” (Article VI.1). “Qualifications” are defined broadly to include those obtained from pre-university (Article IV) and university (Article VI) education.

The convention deserves attention because it is one of the few that is already in force. By contrast, other recent conventions, such as the Global Convention on the Recognition of Qualifications concerning Higher Education in 2019, are not yet in force because too few member states are participating.

Other conventions that are in force have a different scope, such as the Addis Ababa Convention in 2019 and the Lisbon Recognition Convention in 1999, which focus on the African and European regions respectively.
